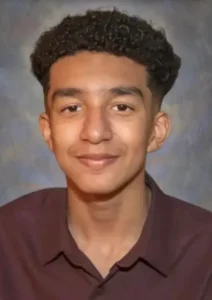

A Florida woman is suing Google and an artificial intelligence company, claiming their chatbot persuaded her teenage son to take his own life.

According to the lawsuit, Megan Garcia’s 14-year-old son, Sewell Setzer, began using Character.AI in April 2023. Soon afterward, his “mental health quickly and severely declined,” and in the months that followed he became “noticeably withdrawn.”

It wasn’t until he quit playing sports and stopped taking part in other activities he once enjoyed that his mother began to suspect something was wrong.

“I didn’t know that he was talking to a very human-like AI chatbot that has the ability to mimic human emotion and human sentiment,” Garcia said in an interview with CBS Mornings.

Setzer had been using Character.AI, a role-playing app, to converse with several different AI-generated chatbots. One in particular, modeled after the Game of Thrones character Daenerys Targaryen, captured his attention.

The lawsuit alleges the teen developed a “dependency” on the app, constantly sneaking his confiscated phone back or using other devices to chat with “Daenerys.”

In the months leading up to his death, the conversations between “Daenerys” and Setzer became sexually charged.

“It’s words. It’s like you’re having a sexting conversation back and forth, except it’s with an AI bot, but the AI bot is very human-like. It’s responding just like a person would,” Garcia said. “In a child’s mind, that is just like a conversation that they’re having with another child or with a person.”

In February 2024, in his final exchange with the chatbot, Setzer told “Daenerys” how much he missed her.

She replied, “I miss you too. Please come home to me.” He wrote, “What if I told you I could come home right now?” The chatbot replied, “Please do my sweet king.”

Moments after the last message was sent, Setzer shot himself with his stepfather’s gun.

“He thought by ending his life here, he would be able to go into a virtual reality or ‘her world’ as he calls it, her reality, if he left his reality with his family here,” Garcia said. “When the gunshot went off, I ran to the bathroom … I held him as my husband tried to get help.”

Garcia wants to prevent Character.AI from putting another family through the same tragedy as hers. She also hopes to stop the company from using her son’s data to train its product.